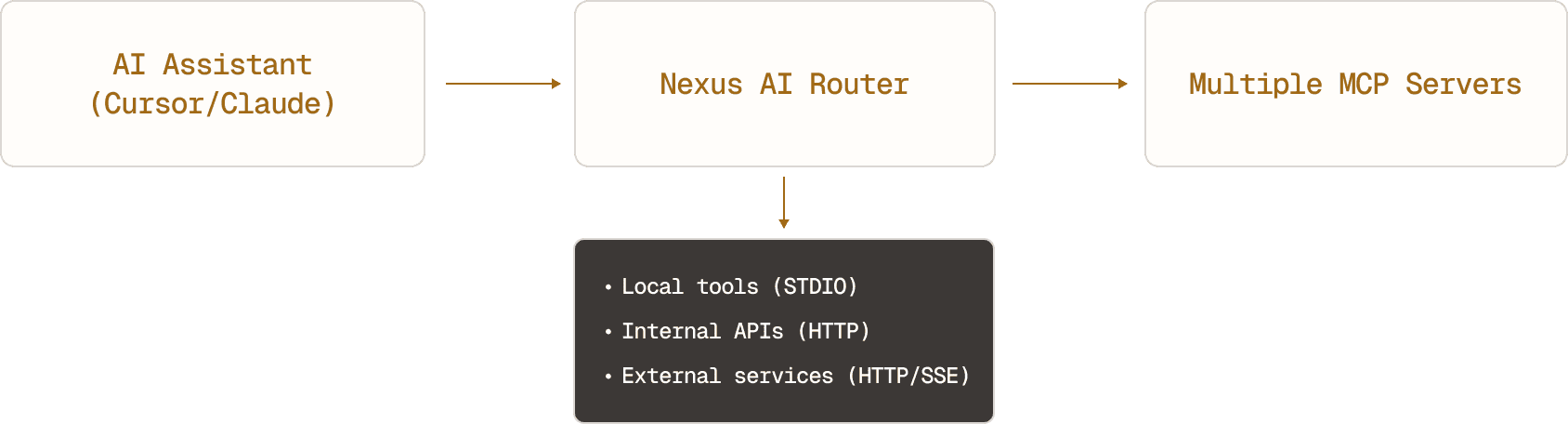

Nexus is an AI Router that provides unified endpoints for both MCP (Model Context Protocol) servers and LLM providers. It lets you aggregate, govern, and manage your entire AI infrastructure through a single interface. It has two main capabilities: LLM provider routing and MCP server aggregation. For LLM providers, Nexus offers:

- Multi-provider AI model routing through a single OpenAI- or Anthropic-compatible API

- Unified access to OpenAI, Anthropic, and Google models

- Streaming support for real-time responses across all providers

- Model discovery and consistent API interface
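To make the single-API idea concrete, here is a minimal Python sketch of a client building a chat request in OpenAI format. It assumes Nexus is listening on `localhost:8000` with the OpenAI protocol mounted at `/llm/openai`, and that models are addressed as `provider/model`, as in the configuration and curl examples on this page. `build_chat_request` is a hypothetical helper written for illustration, not part of Nexus.

```python
import json
import urllib.request

# Assumption: Nexus runs locally with the OpenAI protocol at /llm/openai.
BASE_URL = "http://localhost:8000/llm/openai"


def build_chat_request(model: str, prompt: str, base_url: str = BASE_URL) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-format chat completion request."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{base_url}/v1/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


# The request shape stays the same across providers; only the model id changes.
# The "openai/" prefix is assumed by analogy with the "anthropic/" example.
openai_request = build_chat_request("openai/gpt-4o-mini", "Hello!")
anthropic_request = build_chat_request("anthropic/claude-3-5-sonnet-20241022", "Hello!")
```

With a running Nexus instance, either request could be sent with `urllib.request.urlopen(openai_request)`.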

Instead of configuring each MCP server individually in every AI tool, Nexus provides:

- Single endpoint for all your MCP tools

- Unified authentication with OAuth2 token forwarding

- Performance optimization through intelligent connection caching

- Tool aggregation with automatic namespacing to prevent conflicts

- Enterprise-ready security with TLS and authentication options
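As an illustration of how namespacing prevents conflicts, the sketch below prefixes each tool with its server name using a double underscore, the pattern visible in the `filesystem__read_file` example later on this page. The `namespaced` and `aggregate` helpers and the GitHub tool names are hypothetical; this is not Nexus's actual implementation.

```python
def namespaced(server: str, tool: str) -> str:
    """Join server and tool name with a double underscore."""
    return f"{server}__{tool}"


def aggregate(servers: dict[str, list[str]]) -> dict[str, tuple[str, str]]:
    """Map each namespaced tool name back to (server, original tool name)."""
    catalog: dict[str, tuple[str, str]] = {}
    for server, tools in servers.items():
        for tool in tools:
            catalog[namespaced(server, tool)] = (server, tool)
    return catalog


# Two servers both expose a tool called "search" (hypothetical tool lists).
catalog = aggregate({
    "filesystem": ["read_file", "search"],
    "github": ["search", "create_issue"],
})
```

Because the server name is part of the key, `filesystem__search` and `github__search` coexist in the catalog without one overwriting the other.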

Get Nexus working with your AI assistant in minutes:

# Using the install script

curl -fsSL https://nexusrouter.com/install | bash

# Or run it with Docker

docker run -p 8000:8000 \
  -v $(pwd)/nexus.toml:/etc/nexus.toml \
  ghcr.io/grafbase/nexus:stable

Create a nexus.toml file:

# LLM configuration for AI model routing

[llm]

enabled = true

# Enable protocol endpoints

[llm.protocols.openai]

enabled = true

path = "/llm/openai"

[llm.protocols.anthropic]

enabled = true

path = "/llm/anthropic"

# Configure multiple AI providers

[llm.providers.openai]

type = "openai"

api_key = "{{ env.OPENAI_API_KEY }}"

# Models must be explicitly configured

[llm.providers.openai.models.gpt-4]

[llm.providers.openai.models."gpt-4o-mini"]

[llm.providers.anthropic]

type = "anthropic"

api_key = "{{ env.ANTHROPIC_API_KEY }}"

[llm.providers.anthropic.models."claude-3-5-sonnet-20241022"]

# MCP configuration for tool integration

[mcp]

enabled = true

path = "/mcp"

# Add a simple file system server

[mcp.servers.filesystem]

cmd = ["npx", "-y", "@modelcontextprotocol/server-filesystem", "/home/user/documents"]

# Add a GitHub MCP server

[mcp.servers.github]

url = "https://api.github.com/mcp"

[mcp.servers.github.auth]

token = "{{ env.GITHUB_TOKEN }}"

# Start with default settings

nexus

# Or specify a config file

nexus --config ./nexus.toml

- Open Cursor Settings (Cmd+, on macOS)

- Search for "Model Context Protocol"

- Enable MCP support

- Add to the MCP server configuration:

{
  "nexus": {
    "transport": {
      "type": "http",
      "url": "http://localhost:8000/mcp"
    }
  }
}

Nexus simplifies MCP integration by exposing just two tools to AI assistants:

- search - Discover tools from all connected MCP servers

- execute - Run any discovered tool

This design allows Nexus to aggregate tools from multiple servers without overwhelming the AI assistant with hundreds of individual tools.
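The two-tool pattern can be sketched as a small index that exposes only `search` and `execute`, regardless of how many tools are registered behind it. This is a simplified illustration, not how Nexus is implemented: the `ToolIndex` class, the scoring rule (best per-word similarity from `difflib`), the 0.6 threshold, and the stand-in handlers are all assumptions.

```python
from difflib import SequenceMatcher
from typing import Callable, Dict, List, Tuple


class ToolIndex:
    """Holds many tools but exposes just two operations: search and execute."""

    def __init__(self) -> None:
        self._tools: Dict[str, Tuple[str, Callable[..., object]]] = {}

    def register(self, name: str, description: str, handler: Callable[..., object]) -> None:
        self._tools[name] = (description, handler)

    def search(self, query: str, limit: int = 5) -> List[str]:
        """Rank tools by how closely their name and description match the query words."""
        words = query.lower().split()
        scored = []
        for name, (description, _) in self._tools.items():
            text = name.replace("__", " ").replace("_", " ") + " " + description
            tokens = text.lower().split()
            # For each query word, take its best fuzzy match among the tool's tokens.
            score = sum(max(SequenceMatcher(None, w, t).ratio() for t in tokens) for w in words)
            scored.append((score, name))
        scored.sort(key=lambda pair: (-pair[0], pair[1]))
        # Keep only tools whose average per-word similarity exceeds 0.6.
        return [name for score, name in scored[:limit] if score > 0.6 * len(words)]

    def execute(self, name: str, **arguments: object) -> object:
        """Run a tool previously found through search."""
        _, handler = self._tools[name]
        return handler(**arguments)


def read_file(path: str) -> str:
    # Stand-in handler: a real server would read the file from disk.
    return f"<contents of {path}>"


def create_issue(title: str) -> str:
    # Stand-in handler for a hypothetical GitHub tool.
    return f"created issue: {title}"


index = ToolIndex()
index.register("filesystem__read_file", "Read a file from disk", read_file)
index.register("github__create_issue", "Create an issue in a repository", create_issue)

matches = index.search("file read")
result = index.execute(matches[0], path="/home/user/documents/readme.md")
```

The assistant only ever needs the schemas for `search` and `execute`; the full tool catalog stays on the server side and is consulted on demand.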

- LLM Providers: OpenAI, Anthropic, and Google through a single API

- MCP Servers: STDIO (subprocess), SSE, and HTTP protocols

- Automatic protocol detection for remote servers

- Environment variable substitution in configuration

- Natural language tool discovery across all connected servers

- Fuzzy matching for finding relevant tools quickly

- Namespaced tools prevent conflicts between servers

- Avoids bloating the AI assistant's context

- OAuth2 authentication with JWT validation

- Token forwarding to downstream servers

- TLS configuration for secure connections

- CORS and CSRF protection

- Single binary installation

- Docker support with minimal configuration

- Health checks and monitoring endpoints
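The environment variable substitution listed above appears in the sample nexus.toml as `{{ env.NAME }}` placeholders. As a rough sketch of what such substitution involves, the Python below expands those placeholders in a string. The `substitute_env` helper is hypothetical, and raising an error on a missing variable is an assumption about reasonable behavior, not a statement of what Nexus does.

```python
import os
import re
from typing import Mapping, Optional

# Matches placeholders such as {{ env.OPENAI_API_KEY }}, with optional inner spaces.
_ENV_PATTERN = re.compile(r"\{\{\s*env\.([A-Za-z_][A-Za-z0-9_]*)\s*\}\}")


def substitute_env(text: str, env: Optional[Mapping[str, str]] = None) -> str:
    """Replace every {{ env.NAME }} placeholder with the value of NAME."""
    source = os.environ if env is None else env

    def replace(match: "re.Match[str]") -> str:
        name = match.group(1)
        if name not in source:
            # Assumed behavior: fail loudly rather than leave a placeholder in place.
            raise KeyError(f"environment variable {name} is not set")
        return source[name]

    return _ENV_PATTERN.sub(replace, text)


line = 'api_key = "{{ env.OPENAI_API_KEY }}"'
expanded = substitute_env(line, {"OPENAI_API_KEY": "sk-test"})
```

Keeping secrets in the environment rather than in the file means the same nexus.toml can be committed and shared without exposing API keys.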

claude mcp add --transport http nexus http://localhost:8000/mcp

Access multiple AI providers through a single OpenAI-compatible API:

# List available models from all providers

curl http://localhost:8000/llm/openai/v1/models

# Chat with any model using OpenAI format

curl -X POST http://localhost:8000/llm/openai/v1/chat/completions \
  -H "Content-Type: application/json" \
  -d '{
    "model": "anthropic/claude-3-5-sonnet-20241022",
    "messages": [{"role": "user", "content": "Hello!"}]
  }'

Once connected, your AI assistant will see two tools:

- Use search to find available tools: search for "file read"

- Use execute to run them: execute filesystem__read_file with path "/home/user/documents/readme.md"

- Installation - Install Nexus using various methods

- Server Configuration - Configure the Nexus server and security settings

- LLM Configuration - Configure AI model routing through multiple providers

- MCP Configuration - Set up and manage MCP servers

- Unified AI access: Switch between OpenAI, Anthropic, and Google models seamlessly

- Tool consolidation: Multiple development tools through one MCP interface

- Local development: Test and debug both MCP servers and LLM integrations

- Centralized AI infrastructure: Shared access to multiple LLM providers and tools

- Access control: Authentication and authorization for both models and tools

- Cost optimization: Monitor and control usage across all AI resources

- AI governance: Centralized control over both LLM access and tool usage

- Security & compliance: OAuth2 authentication with token forwarding

- Scalability: Load balancing and caching for high-performance deployments

- GitHub Issues: github.com/grafbase/nexus/issues

- Documentation: You're already here!